Machine Learning and Deep Learning Optimizers Implementation


In this course you will learn:

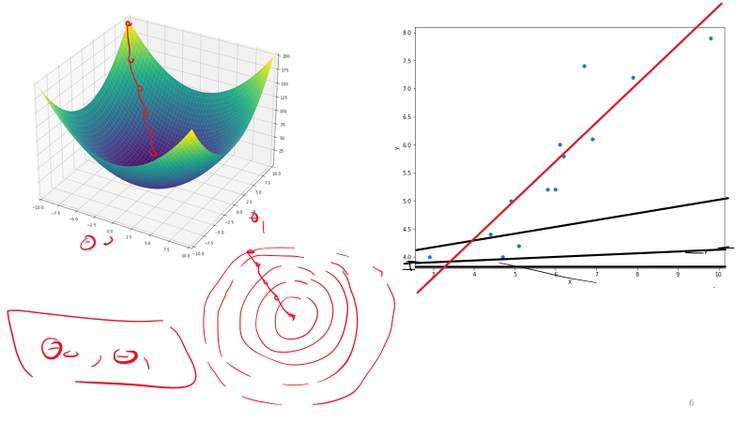

1- How to implement the batch (vanilla) gradient descent (GD) optimizer to obtain the optimal model parameters of single-variable and multivariable linear regression (LR) models.

2- How to implement mini-batch and stochastic GD for single-variable and multivariable LR models.
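Items 1 and 2 differ only in how many samples feed each parameter update. The sketch below is minimal and makes three assumptions: Python with NumPy, a half mean-squared-error cost, and zero-initialized parameters. The course notebooks may scale the cost or initialize differently. Setting `batch_size` to the number of samples gives batch GD, and `batch_size=1` gives SGD. The names `add_bias` and `minibatch_gd` are hypothetical and do not come from the course material.

```python
import numpy as np

def add_bias(X):
    """Prepend a column of ones so theta[0] acts as the intercept."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X.reshape(-1, 1)  # single-variable LR: one feature column
    return np.hstack([np.ones((X.shape[0], 1)), X])

def minibatch_gd(X, y, lr=0.1, batch_size=32, epochs=100, seed=0):
    """Mini-batch GD for linear regression with cost J = (1/2m) * sum(error**2).

    batch_size == number of samples -> batch (vanilla) GD
    batch_size == 1                 -> stochastic GD (SGD)
    """
    rng = np.random.default_rng(seed)
    Xb = add_bias(X)
    y = np.asarray(y, dtype=float).ravel()
    m, n = Xb.shape
    theta = np.zeros(n)                        # initial model parameters
    for _ in range(epochs):                    # one epoch = one pass over the data
        idx = rng.permutation(m)               # reshuffle the samples every epoch
        for start in range(0, m, batch_size):  # one iteration per mini-batch
            batch = idx[start:start + batch_size]
            error = Xb[batch] @ theta - y[batch]
            grad = Xb[batch].T @ error / len(batch)
            theta -= lr * grad                 # parameter update
    return theta

# Usage on synthetic, noise-free data: y = 4 + 3*x1 - 2*x2
rng = np.random.default_rng(42)
X = rng.uniform(0, 1, size=(200, 2))
y = 4 + 3 * X[:, 0] - 2 * X[:, 1]
theta_batch = minibatch_gd(X, y, lr=0.5, batch_size=len(y), epochs=2000)
theta_sgd = minibatch_gd(X, y, lr=0.05, batch_size=1, epochs=50)
print(theta_batch, theta_sgd)  # both should approach [4, 3, -2]
```

Reshuffling every epoch keeps SGD and mini-batch GD from seeing the samples in the same order on each pass. For batch GD it has no effect, because the gradient sums over all samples anyway.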

You will do this by following the guided steps presented in the attached notebook, supported by a video series that describes each step.

You will also implement the cost function and the stop conditions, and plot the learning curves.
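The cost function, the two stop conditions and the loss history behind a learning curve fit in a few lines. This is a minimal sketch that assumes a half-MSE cost. It adds two stop conditions: a gradient-norm threshold (`grad_tol`) and a cost-change threshold (`cost_tol`). These names, their values and the function names `compute_cost` and `batch_gd` are illustrative choices and are not taken from the course notebooks.

```python
import numpy as np

def compute_cost(Xb, y, theta):
    """Half mean squared error: J = (1 / 2m) * sum((Xb @ theta - y) ** 2)."""
    error = Xb @ theta - y
    return error @ error / (2 * len(y))

def batch_gd(Xb, y, lr=0.1, max_epochs=10_000, grad_tol=1e-6, cost_tol=1e-12):
    """Batch GD that records the cost after every epoch and stops early when
    the gradient norm or the change in cost falls below its tolerance."""
    m, n = Xb.shape
    theta = np.zeros(n)
    costs = [compute_cost(Xb, y, theta)]
    for _ in range(max_epochs):
        grad = Xb.T @ (Xb @ theta - y) / m
        if np.linalg.norm(grad) < grad_tol:        # gradient stop condition
            break
        theta = theta - lr * grad
        costs.append(compute_cost(Xb, y, theta))
        if abs(costs[-2] - costs[-1]) < cost_tol:  # cost convergence check
            break
    return theta, costs

# Usage: single-variable LR, y = 1.5 + 2x, with a bias column prepended
x = np.linspace(0, 1, 50)
y = 1.5 + 2.0 * x
Xb = np.column_stack([np.ones_like(x), x])
theta, costs = batch_gd(Xb, y, lr=0.5)
print(theta, len(costs))
```

The returned `costs` list is the loss-vs-epochs learning curve. With Matplotlib, `plt.plot(costs)` draws it.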

You will understand the advantage of a vectorized implementation of the optimizer.
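The sketch below shows what vectorization changes. It computes the same half-MSE gradient twice: once with explicit Python loops and once as the single matrix expression `X^T (X theta - y) / m`. The data sizes are arbitrary and timings depend on the machine, so only the agreement of the two results is reliable. The function names are hypothetical.

```python
import time
import numpy as np

def gradient_loop(Xb, y, theta):
    """Gradient of the half-MSE cost using explicit Python loops."""
    m, n = Xb.shape
    grad = [0.0] * n
    for i in range(m):
        pred = sum(Xb[i, j] * theta[j] for j in range(n))  # prediction for sample i
        for j in range(n):
            grad[j] += (pred - y[i]) * Xb[i, j]
    return np.array(grad) / m

def gradient_vectorized(Xb, y, theta):
    """Same gradient as one matrix expression: X^T (X theta - y) / m."""
    return Xb.T @ (Xb @ theta - y) / len(y)

rng = np.random.default_rng(0)
Xb = np.column_stack([np.ones(2000), rng.normal(size=(2000, 3))])
y = rng.normal(size=2000)
theta = rng.normal(size=4)

t0 = time.perf_counter()
g_loop = gradient_loop(Xb, y, theta)
t1 = time.perf_counter()
g_vec = gradient_vectorized(Xb, y, theta)
t2 = time.perf_counter()
print(f"loop: {t1 - t0:.4f} s, vectorized: {t2 - t1:.6f} s")
```

The vectorized form also generalizes without change: the same line serves single-variable LR (two columns including the bias) and multivariable LR (any number of columns).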

This implementation will help you solidify the concepts and build an intuition for how optimizers work during the training phase.

By the end of this course, you will have a balanced theoretical and practical understanding of the optimizers that are widely used in both machine learning (ML) and deep learning (DL).

In this course we will focus on the main numerical optimization concepts and techniques used in ML and DL.

Although we apply these techniques to single-variable and multivariable LR, the same concepts carry over to other ML and DL models.

We use LR here to keep the model simple and to focus on the optimizers rather than the models.

In subsequent practical work, we will build on this to implement more advanced optimizers such as:

– Momentum based GD.

– Nesterov accelerated gradient (NAG).

– Adaptive gradient (Adagrad).

– RMSProp.

– Adam.

– BFGS.
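For orientation, the first five optimizers in this list differ only in how they turn the gradient into a parameter update. The sketch below applies the common textbook update rules to the same half-MSE LR gradient. It is not the course's implementation, and several choices in it are assumptions: the NAG look-ahead form, a single `beta` reused as the momentum and RMSProp decay rate, and the defaults `beta2=0.999` and `eps=1e-8`. The course may formulate some rules differently. The function names `grad_fn` and `optimize` are hypothetical.

```python
import numpy as np

def grad_fn(Xb, y, theta):
    """Gradient of the half-MSE cost for linear regression."""
    return Xb.T @ (Xb @ theta - y) / len(y)

def optimize(Xb, y, method="adam", lr=0.01, epochs=500,
             beta=0.9, beta2=0.999, eps=1e-8):
    theta = np.zeros(Xb.shape[1])
    v = np.zeros_like(theta)  # velocity (momentum, NAG) or first moment (Adam)
    s = np.zeros_like(theta)  # squared-gradient accumulator (Adagrad, RMSProp, Adam)
    for t in range(1, epochs + 1):
        if method == "momentum":
            v = beta * v + lr * grad_fn(Xb, y, theta)
            theta = theta - v
        elif method == "nag":
            # gradient evaluated at the look-ahead point theta - beta * v
            v = beta * v + lr * grad_fn(Xb, y, theta - beta * v)
            theta = theta - v
        elif method == "adagrad":
            g = grad_fn(Xb, y, theta)
            s = s + g ** 2                       # accumulate all past squared gradients
            theta = theta - lr * g / (np.sqrt(s) + eps)
        elif method == "rmsprop":
            g = grad_fn(Xb, y, theta)
            s = beta * s + (1 - beta) * g ** 2   # exponential moving average instead
            theta = theta - lr * g / (np.sqrt(s) + eps)
        elif method == "adam":
            g = grad_fn(Xb, y, theta)
            v = beta * v + (1 - beta) * g
            s = beta2 * s + (1 - beta2) * g ** 2
            v_hat = v / (1 - beta ** t)          # bias correction for zero init
            s_hat = s / (1 - beta2 ** t)
            theta = theta - lr * v_hat / (np.sqrt(s_hat) + eps)
        else:
            raise ValueError(f"unknown method: {method}")
    return theta

x = np.linspace(0, 1, 50)
Xb = np.column_stack([np.ones_like(x), x])
y = 1.5 + 2.0 * x
for name in ["momentum", "nag", "adagrad", "rmsprop", "adam"]:
    print(name, optimize(Xb, y, method=name))
```

BFGS is not a first-order update rule of this kind. It is a quasi-Newton method, and SciPy exposes it through `scipy.optimize.minimize(..., method="BFGS")`.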

You will be provided with the following:

– Course material (slides) from "Master numerical optimization for machine learning and deep learning in 5 days".

– Notebooks of the guided steps you should follow.

– Notebooks containing the reference solutions to the practical work (the implementation).

– Data files.

You should do the implementation yourself and then compare your code with the practical-session solution provided in a separate notebook.

A video series explaining the solution is provided. However, do not look at the solution until you have finished your own implementation.

Curriculum (all items are video lessons):

– 8. About the Lab
– 9. Regression Data
– 10. Model Parameters Initialization
– 11. Model Prediction
– 12. Cost Calculation
– 13. Gradient Calculation
– 14. Model Parameters Update
– 15. Iterate Till Achieving the Optimum Parameter Values
– 16. Optimizer Output
– 17. Gradient Stop Condition
– 18. Gradient Stop Condition Output
– 19. Model Performance Evaluation
– 20. How to Obtain Better Model Performance?
– 21. Plotting of Learning Curves
– 22. Loss vs. Epochs
– 23. Loss vs. Model Parameters
– 24. Interpretation: Loss vs. Epochs
– 25. Interpretation: Loss vs. Model Parameters
– 26. Cost Convergence Check
– 28. Step by Step Implementation
– 29. Iterate Till Achieving the Optimum Parameter Values
– 30. Gradient Stop Condition
– 31. Model Performance Evaluation
– 32. Different Hyperparameter Combinations
– 33. Plotting Loss vs. Epochs
– 34. Plot Loss vs. Model Parameters
– 35. Cost Convergence Check
– 36. Make Your Implementation a Function
– 37. Using Your Function to Explore the Learning Rate Effect
– 39. Reminder of Single Variable LR Optimization
– 40. Multivariable LR Optimization Problem Definition
– 41. Multivariable LR Optimization Problem Solution Using Vectorized Implementation 1
– 42. Multivariable LR Optimization Problem Solution Using Vectorized Implementation 2
– 43. Vectorized Implementation 3: Gradient Vector Calculation
– 44. Multivariable LR Optimization Problem Solution Using Vectorized Implementation 4
– 45. Vectorized Implementation 5: Generalized Implementation for Single and Multivariable LR
– 54. Data Preparation
– 55. Step by Step Implementation
– 56. Putting All Steps Together
– 57. Results and Model Evaluation
– 58. Learning Curves 1: Loss vs. Epochs
– 59. Learning Curves 2: Loss vs. Model Parameters (Thetas)
– 60. Different Scenarios With Different Hyperparameters
– 61. Multivariable LR Optimization Implementation as a Function
– 62. Introduction
– 63. The Main Idea 1: Epoch vs. Iteration
– 64. The Main Idea 2: Batch GD Problems
– 65. The Main Idea 3: Stochastic GD (SGD)
– 66. Implementation Steps 1 (Tips and Tricks)
– 67. Implementation Steps 2 (Data Generation and Applying Batch GD)
– 68. Implementation Steps 3 (Step by Step)
– 69. Implementation Steps 4 (Iterate to Finish One Epoch)
– 70. Implementation Steps 5 (More Epochs and Stop Condition Tricks)
– 71. Implementation Steps 6 (Results and Discussion)
– 72. Data Generation
– 73. Batch GD Scenario
– 74. Implementation Steps 1 (Data Shuffle)
– 75. Implementation Steps 2 (One Iteration)
– 76. Implementation Steps 3 (Iterate to Finish One Epoch)
– 77. Implementation Steps 4 (More Epochs)
– 78. Learning Curves and Model Evaluation
– 79. SGD for Single Variable LR as a Function
– 80. SGD vs. Batch GD Using Different Hyperparameters
